posted 02-13-2013 02:13 AM

Everyone wants good EDA data. Nobody wants bad data. While we are all contemplating utter silence due to insults and threats of lawsuits, we are at risk of losing the main value of this forum aside from friendships, and of losing an opportunity for important discussion about how the EDA works and what to expect from it.

Different EDA modes are sometimes an acute topic, especially when they disagree. Most of the time they will agree. But nothing is perfect, and so sometimes they may be observed to disagree. This may not be an issue of defectiveness but of imperfection. EDA data, like the polygraph as a whole, seem to be very good but still imperfect. I know I'm ranting on that only because others are ranting on less sensible messages.

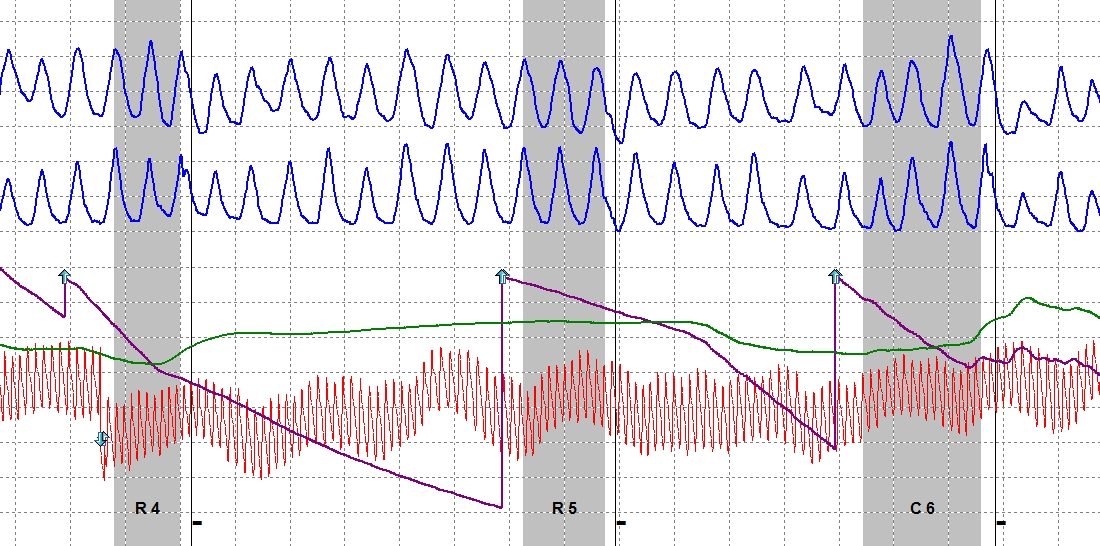

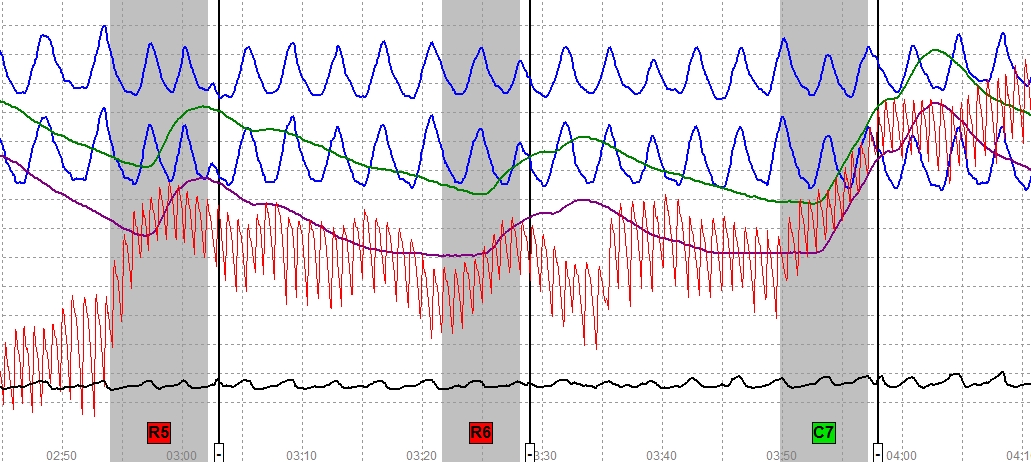

You can see in the first graphic (reposted below from the other thread) that C4 shows a greater reaction than R6 using the Auto EDA (green), while R6 shows a greater reaction than C4 using the Raw EDA (purple). If you use the Federal bigger-is-better rule, which relies on visibly discernible differences, then you can imagine what happens to the numerical score: the sign of the score can depend on which EDA mode you are looking at.
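To make the scoring consequence concrete, here is a minimal sketch in Python. The amplitudes are made-up numbers in arbitrary chart units, not measurements taken from these charts, and `min_difference` is a hypothetical stand-in for "visibly discernible." It assumes the usual sign convention of + when the comparison reaction is larger and - when the relevant reaction is larger.

```python
def three_position_score(comparison, relevant, min_difference=0.0):
    """Bigger-is-better: +1 if the comparison reaction is larger,
    -1 if the relevant reaction is larger, 0 if the difference is
    too small to call. min_difference is a hypothetical threshold."""
    diff = comparison - relevant
    if abs(diff) <= min_difference:
        return 0
    return 1 if diff > 0 else -1


# Hypothetical amplitudes (arbitrary units), invented for illustration only.
amplitudes = {
    "auto": {"C4": 12.0, "R6": 9.0},   # C4 looks larger in Auto mode
    "raw": {"C4": 8.0, "R6": 11.0},    # R6 looks larger in Raw mode
}

scores = {
    mode: three_position_score(values["C4"], values["R6"], min_difference=1.0)
    for mode, values in amplitudes.items()
}
print(scores)
```

With these invented numbers the same question pair scores +1 in one mode and -1 in the other, which is the kind of disagreement visible in the graphic.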

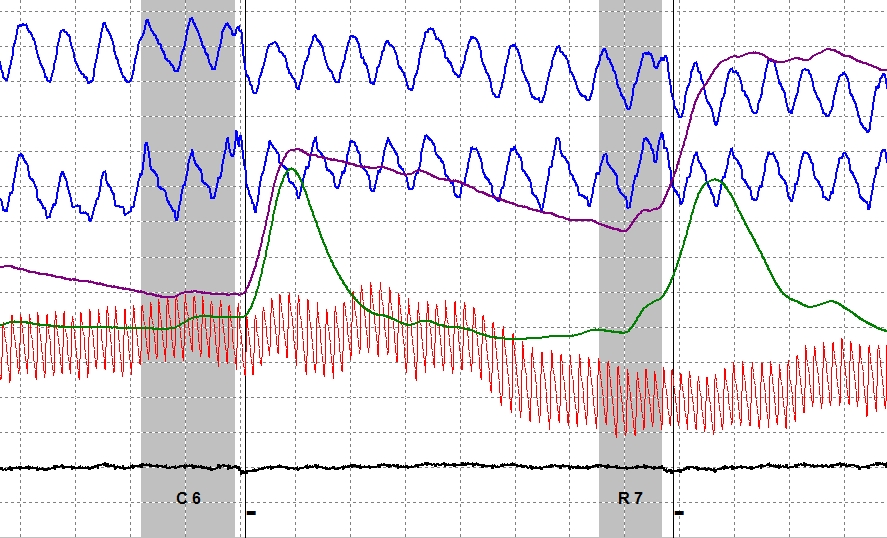

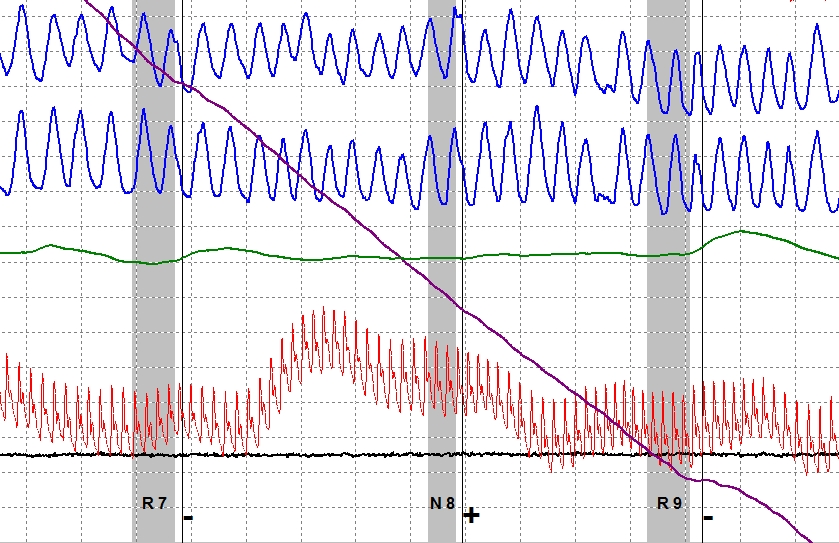

In the second graphic (also reposted below from the other thread) C6 and R7 appear to have nearly equal vertical response magnitude using the Auto EDA (green) though you will see that C6 is slightly larger if you look closer (as they like to say). Using the Raw EDA you will notice that R7 is greater than C6.

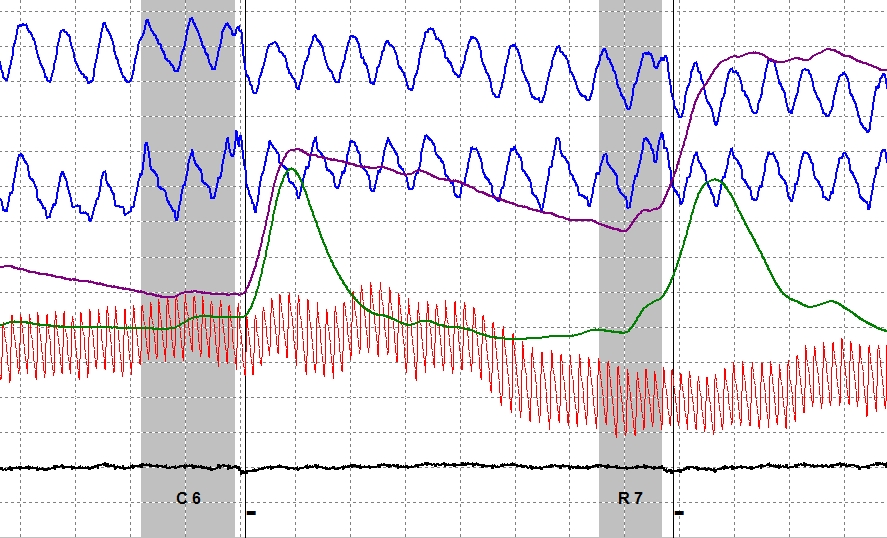

Here is another.

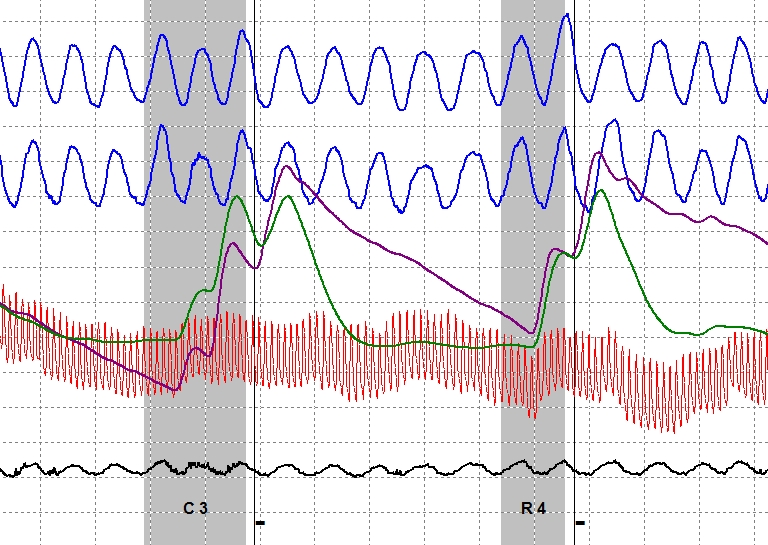

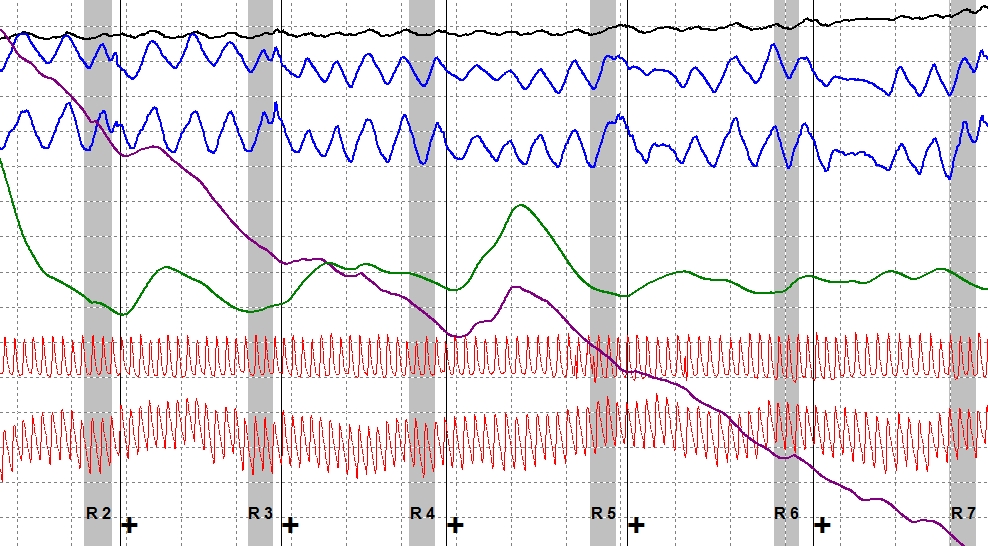

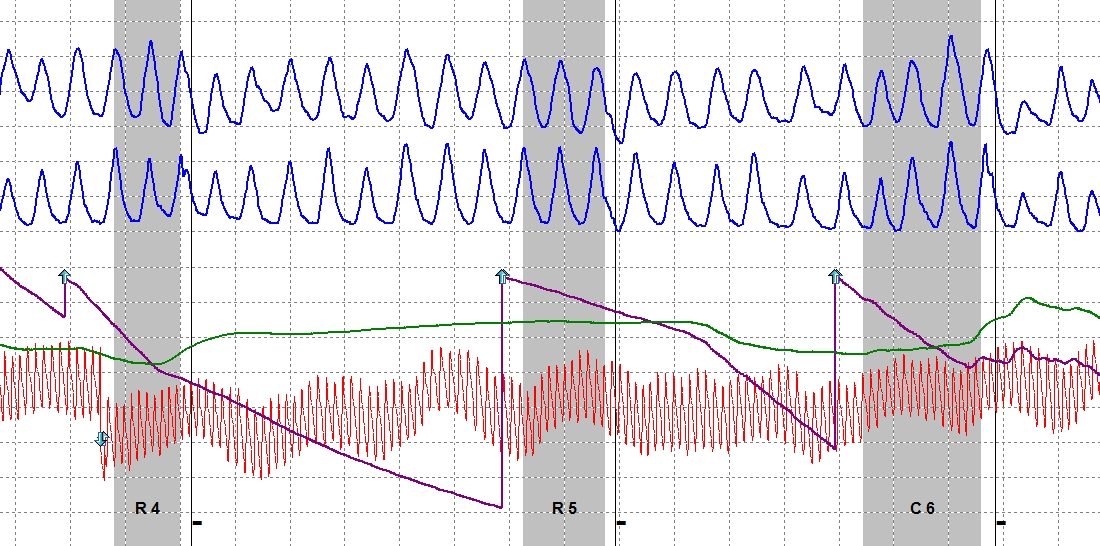

You can see in this one that C3 and R4 are largely equivalent using the Auto EDA (green). Someone with more acute eyesight than me will notice that R4 is actually slightly larger. Using the Raw EDA (purple) C4 is clearly larger than R4.

It is my hope that when we are done being afraid of the truth about EDA signal processing, and done being afraid of lawsuits, and done with misleading marketing strategies, then we can someday have an open and honest conversation about EDA.

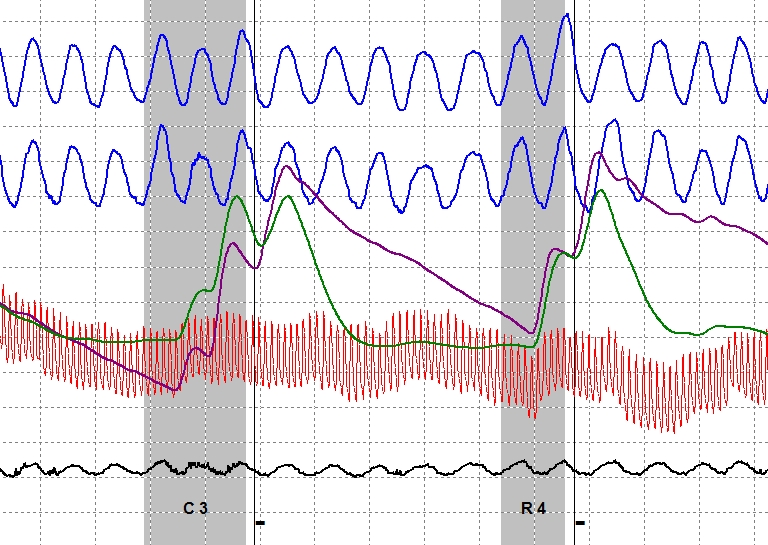

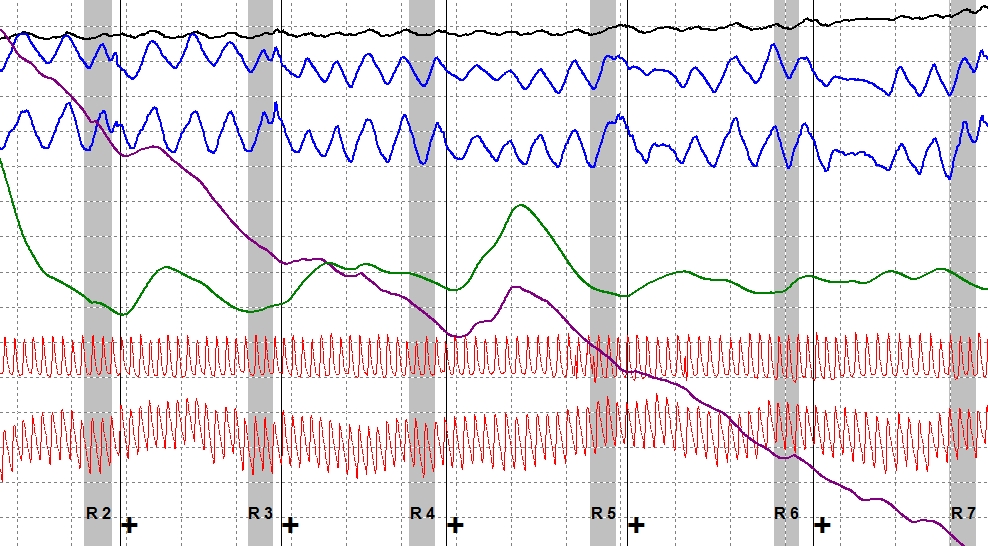

One thing is certain: nobody enjoys plunging EDA.

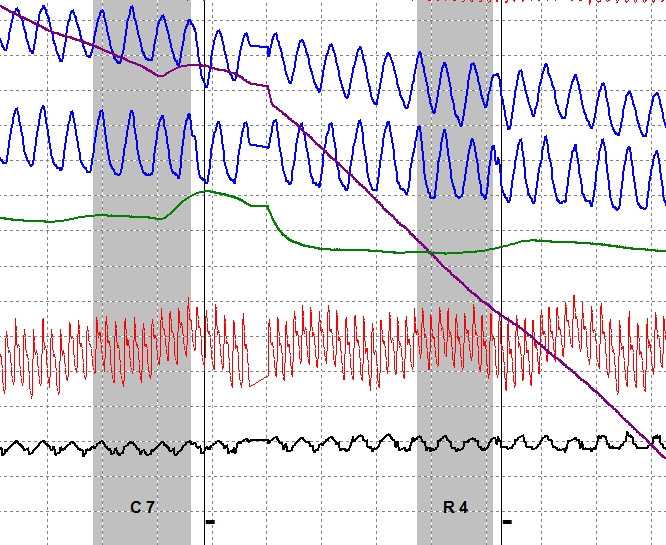

This is a good example of several issues. One is that descending EDA data, for some persons, is a known issue that is described in the psychophysiology literature. Another issue here is a sudden, though brief, stoppage of data acquisition. I'm hoping an engineer will explain that to me some day, because I've seen it on other charts too. And finally, look closer at R4 and you'll notice something that mystifies us field examiners until we learn to understand EDA data in terms of a spectrum of frequencies, in which descending and tonic activity are very low frequencies, scorable reactions are slightly higher frequencies, and noisy/ugly data are even higher frequencies. In this segment at R4 the Auto EDA shows an upward reaction during the response-onset-window, while the Raw EDA (purple) is persistently going downward at the same time. There is a slight reduction in the rate of downward activity, which is actually a change in the frequency spectrum of the waveform (i.e., a reduction of the proportion of very low frequencies and an increase in the proportion of higher frequencies). What we don't yet know is whether that observable change is indicative of the kind of increased sympathetic/autonomic nervous system activity that we want to score.
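The R4 behavior can be imitated with synthetic data. What follows is only a sketch: it assumes a generic first-order high-pass filter as a stand-in for an auto-centering EDA mode, and it is not any manufacturer's actual Auto EDA algorithm. The sampling rate, time constant, and slopes are all assumptions picked for illustration. The synthetic "raw" trace never stops descending; it only descends more slowly for five seconds.

```python
RATE_HZ = 10             # assumed sampling rate (samples per second)
DT = 1.0 / RATE_HZ
TIME_CONSTANT_S = 2.0    # assumed filter time constant, for illustration


def descending_trace(n_samples, slow_start, slow_end):
    """Raw-like trace that always falls, but falls more slowly
    between sample indices slow_start and slow_end."""
    trace = [0.0]
    for i in range(1, n_samples):
        slope = -0.2 if slow_start <= i < slow_end else -1.0  # units/second
        trace.append(trace[-1] + slope * DT)
    return trace


def high_pass(samples, dt, time_constant):
    """Generic first-order (RC-style) high-pass filter."""
    alpha = time_constant / (time_constant + dt)
    out = [0.0]
    for prev, cur in zip(samples, samples[1:]):
        out.append(alpha * (out[-1] + cur - prev))
    return out


slow_start, slow_end = 15 * RATE_HZ, 20 * RATE_HZ
raw = descending_trace(30 * RATE_HZ, slow_start, slow_end)
filtered = high_pass(raw, DT, TIME_CONSTANT_S)

# Net change of each trace across the slowdown window.
raw_change = raw[slow_end - 1] - raw[slow_start]
filtered_change = filtered[slow_end - 1] - filtered[slow_start]
print(f"raw change during slowdown:      {raw_change:+.2f}")
print(f"filtered change during slowdown: {filtered_change:+.2f}")
```

In this toy case the raw change is negative while the filtered change is positive: the filter removes the steady descent, so a mere reduction in the rate of descent shows up as an upward movement. Whether that movement reflects a scorable physiological reaction is exactly the open question, and the sketch does nothing to answer it.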

Additionally, you will notice the descending pneumos. I'm not sure if that is caused by a sensor that is sliding around on the examinee or by a faulty component.

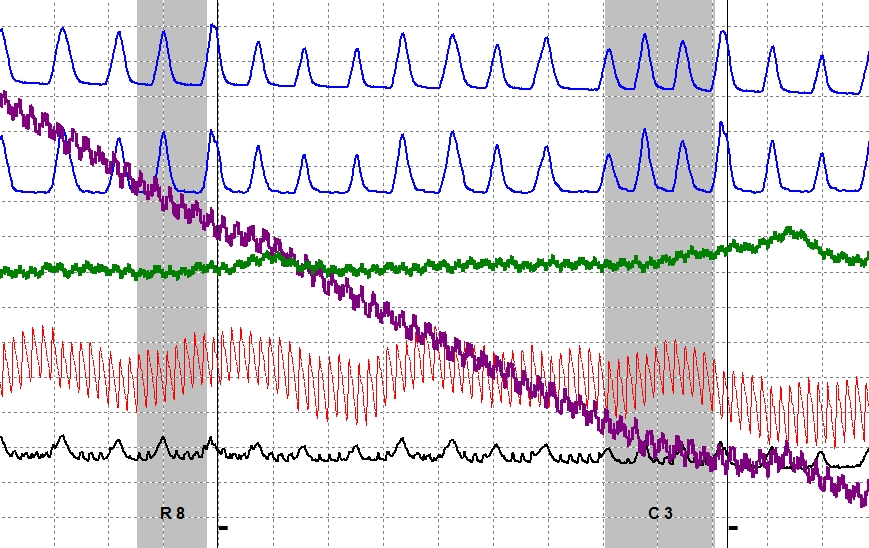

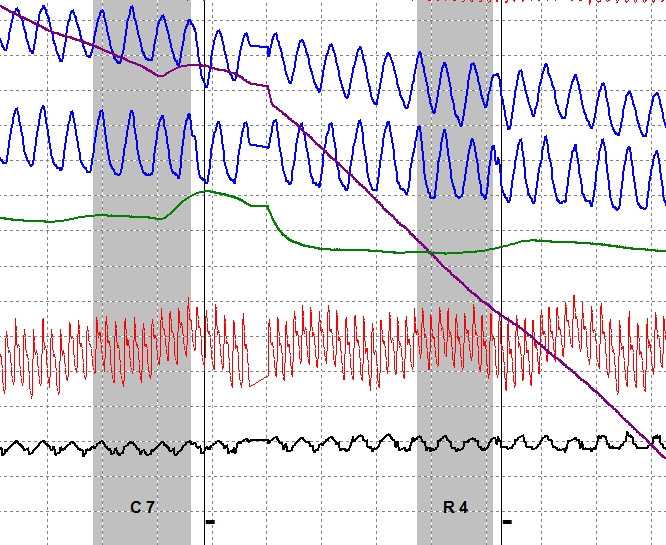

Here is another descending EDA example.

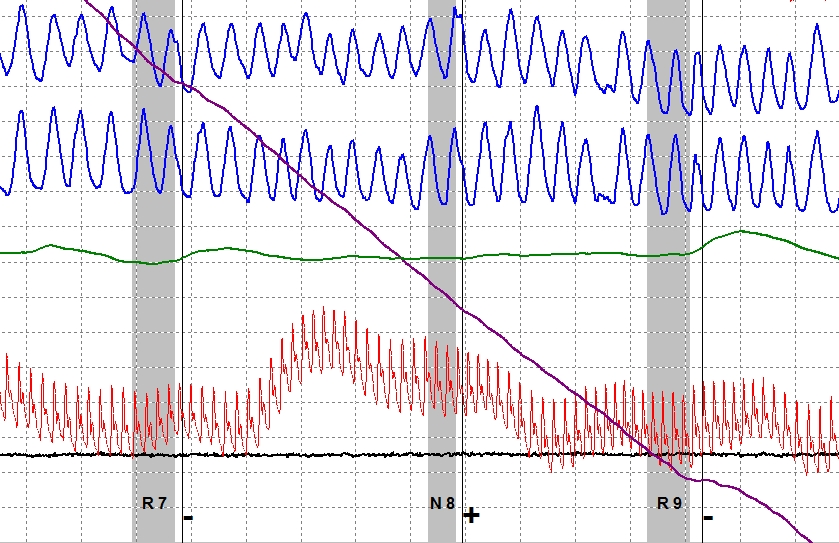

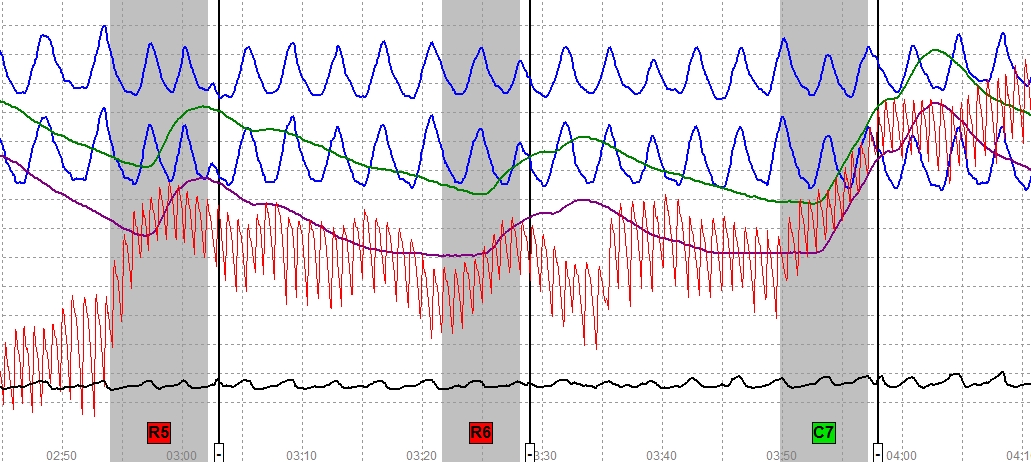

In this one you can see the pneumos are more stable. This is an RI (relevant-irrelevant) exam, and you can see the same issue at R7, in which the Auto EDA (green) and Raw EDA (purple) seem to go in different directions. There is a reason for this and a way to make sense of it, even though it is challenging for us if we are not thinking about the data in the right way.

Sometimes you can still get responses out of descending Raw EDA, and sometimes the Auto will find things we cannot see. Look closer at R4 (below) and you'll see a good example of the odd phenomenon.

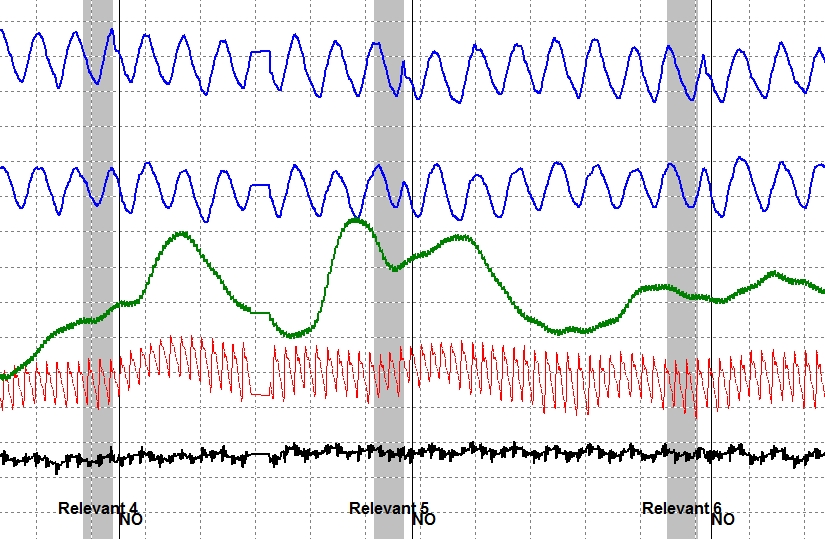

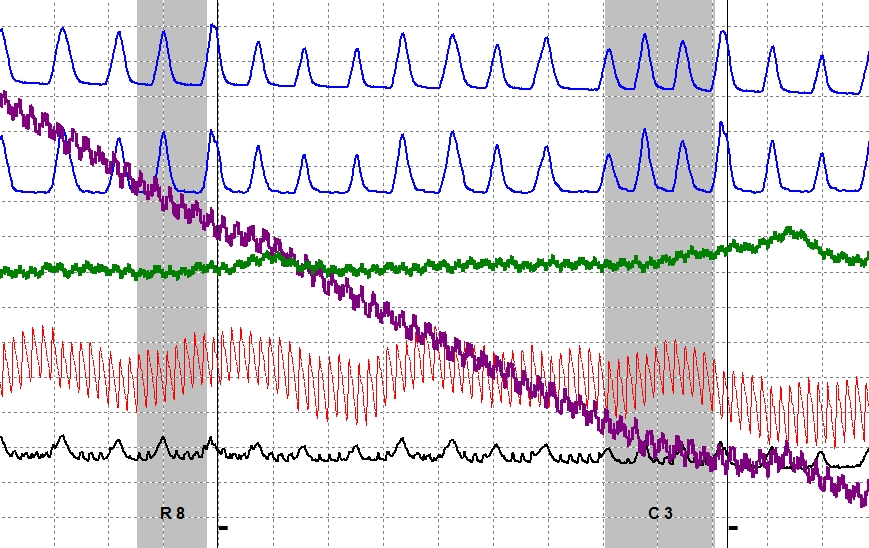

Sometimes the data are fairly obvious even when the EDA data are plunging. This one is a CIT exam.

Other times the EDA data are fuzzy and noisy, as if impaired by radio-frequency interference, perhaps from a cell-phone tower on the office building roof, or a high-power transformer or transmitter, or something else that produces a lot of radio-frequency interference. Here the noisy data are combined with the plunging, unresponsive data.

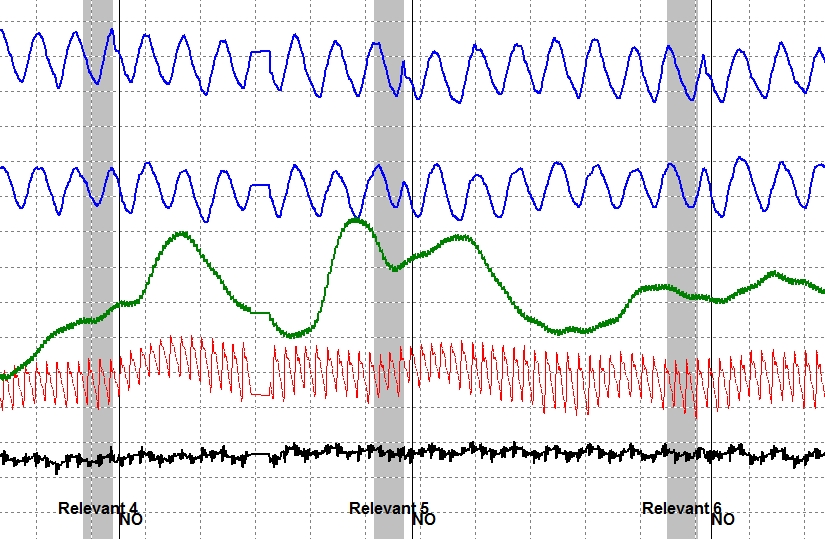

Sometimes the EDA data work despite the fact that they are impaired by lots of fuzzy noise. Three relevants in a row reveal this as another CIT exam.

Look closer (above) and you'll see that data acquisition stoppage again. Where's an engineer when you need one?

Look even closer and you will notice that NONE of these exams was conducted using a Lafayette system.

For reference, here is a graphic of a Lafayette chart.

Look at the details. Don't be confused by the display colors, and don't be confused by marketing hype.

EDA data work fine most of the time, but try not to be surprised when you find something that ain't perfect.

The point of all this is not to point fingers and not to cause trouble. The point is this: let's be realistic and let's be honest about the fact that the instruments seem to work well despite their imperfections and despite their differences.

I used an Axciton for a few years and enjoyed it. I used a Limestone for a few years and enjoyed it. Now I use a Lafayette and I enjoy it too. Sure I would like to try to convince everyone that the Lafayette is the best and the others not so much, but I'm not willing to be dishonest to do that. I'm not interested in more conflict, but I'm also not interested in being bullied and maligned.

There is an ethical issue with the EDA, but it is not a matter of defectiveness or deficiency. The ethical issue is whether instrument manufacturers are going to compete so aggressively that they/we mislead field examiners, trainers, quality control people, and program managers about what to expect from the EDA.

- more later, it's late and I still have to replace a squealing exhaust inducer fan or my furnace will never let me sleep.

.02

r

------------------

"Gentlemen, you can't fight in here. This is the war room."

--(Stanley Kubrick/Peter Sellers - Dr. Strangelove, 1964)

Disclaimer: the views and opinions expressed herein are those of the author and not the LIC or the APA.

Polygraph Place Bulletin Board

Professional Issues - Private Forum for Examiners ONLY

EDA
